Navigating The Flow: Ensuring Accuracy And Reliability In Connecting Water Sensors To Cloud Platforms

By Jason Morris, Product & Marketing Manager – Digital & Metrology ANZ, Xylem

Obtaining data is the first step toward managing pump stations, sumps, and other critical infrastructure more effectively. From flow rates to pressure to water quality, integrating water sensor data with cloud platforms centralizes that data and makes it accessible. However, achieving accuracy and reliability in this integration is not without its challenges.

Issues such as signal interference, sensor drift, and data latency can compromise the integrity of the data stream. Moreover, the sheer volume of data generated by multiple sensors operating in tandem necessitates robust mechanisms for data validation, synchronization, and error correction. As such, it is critical to select sensors and a cloud platform provider that together offer the features needed to deliver accurate and reliable data.

The Importance Of Integration

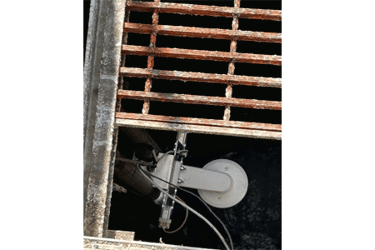

Water sensors and other instrumentation serve as the frontline observers, capturing real-time data on various parameters such as flow, level, pressure, pH levels, temperature, turbidity, and dissolved oxygen. This data is invaluable for a wide range of applications, including sewer and potable water networks, treatment plant operations, environmental conservation, and public health.

Cloud platforms provide the infrastructure for scalable storage, processing, and analysis of this sensor data. By seamlessly connecting sensors to the cloud, organizations can leverage advanced analytics, predictive modeling, and decision support systems to drive informed actions and policymaking.

Integration Challenges

In the water utility network, where environmental conditions can be harsh and remote locations are common, ensuring the fidelity of sensor data poses significant hurdles. For example, the growing volume of Wi-Fi, cellular, and other radio frequency transmissions can cause signal interference, which can compromise data integrity. In addition, sensor drift can occur over time, with instrument readings gradually shifting away from the initial calibration. Water utilities also need to be mindful of data latency, which can be caused by bandwidth limitations, transmission protocols, and geographical distance.

All of these issues reduce the accuracy of the data that reaches the cloud platform.
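To make the idea of data validation concrete, the following is a minimal sketch in Python, not a description of any particular platform. It checks each incoming reading against a plausible physical range and against a maximum acceptable delay between measurement and arrival. The `Reading` structure, the `validate` function, the sensor name, and the limits shown are all hypothetical and would need to be set per sensor and per site.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class Reading:
    """Hypothetical shape of one sensor reading as it arrives at the platform."""
    sensor_id: str
    value: float
    measured_at: datetime  # timestamp applied by the sensor
    received_at: datetime  # timestamp applied when the platform receives it


def validate(reading, low, high, max_latency):
    """Return a list of issue labels; an empty list means the reading passed."""
    issues = []
    # Range check: values outside the physically plausible band are suspect.
    if not (low <= reading.value <= high):
        issues.append("out_of_range")
    # Latency check: readings that arrive too late may no longer be actionable.
    if reading.received_at - reading.measured_at > max_latency:
        issues.append("late")
    return issues


# Example with assumed limits: a wet-well level of 0 to 10 m, 5 minutes max delay.
t0 = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
good = Reading("level-01", 2.4, t0, t0 + timedelta(seconds=20))
bad = Reading("level-01", 14.2, t0, t0 + timedelta(minutes=12))

print(validate(good, 0.0, 10.0, timedelta(minutes=5)))  # []
print(validate(bad, 0.0, 10.0, timedelta(minutes=5)))   # ['out_of_range', 'late']
```

Checks like these do not detect slow sensor drift on their own; that typically requires comparison against a reference measurement or a neighboring sensor.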

Strategies For Success

To overcome these challenges and realize the full potential of connecting water sensors to cloud platforms, water utilities need to implement a multifaceted approach:

1. Sensor Selection and Calibration. Any given sensor must be rugged, reliable, and well-suited to the specific environmental conditions. Depending on the sensor measurement element, it may be necessary to perform regular calibration to maintain accuracy and consistency over time.

2. Data Transmission Technologies. Implementing low-power wide-area transmission technologies such as LoRa (long range) radio and NB-IoT (narrowband Internet of Things) supports efficient and reliable communication between sensors and cloud platforms. These technologies offer low-power, long-range communication capabilities, making them well suited to transmitting data from remote or challenging environments.

3. Data Transmission Protocols. Water utilities should choose instrumentation with resilient communication protocols that emphasize data integrity and dependability, particularly in remote or adverse conditions. This should include encryption and authentication mechanisms that can secure data transmission and prevent tampering or interception.

4. Edge Computing Capabilities. Utilities can reduce latency and bandwidth requirements by leveraging sensors with edge computing technologies. Such sensors can perform preliminary data processing and analysis at the sensor level, which reduces the amount of data that needs to be sent over the network.

5. Anomaly Detection Algorithms. When dealing with large volumes of data, anomalies can occur at any time with one or more sensors. Anomaly detection algorithms can identify and flag potential data inconsistencies in real time. From there, operators can determine if the sensor requires maintenance or calibration or if the anomaly is an isolated incident.

6. Cloud Infrastructure Optimization. Cloud architectures must be scalable, fault-tolerant, and capable of handling large volumes of sensor data. In addition, the software must offer native services for data ingestion, storage, and processing, optimizing resource utilization and minimizing costs.

7. Collaborative Ecosystems. Lastly, it is important to ensure that any cloud system is compatible with sensors from a range of manufacturers. By fostering collaboration between cloud service providers, sensor manufacturers, researchers, and policymakers, utilities are better able to drive innovation and develop best practices. This can be done by establishing data-sharing protocols and standards to facilitate interoperability and cross-domain insights.
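As an illustration of items 4 and 5 above, the following Python sketch shows one simple way a device or gateway might summarize a window of readings before transmission and flag readings that deviate sharply from recent history. It is a sketch under stated assumptions, not a specific vendor's algorithm: the function names, the window size, the threshold, and the `min_scale` floor (a stand-in for sensor resolution) are all hypothetical. The anomaly check uses a rolling median and median absolute deviation, which is one common choice among many.

```python
from statistics import median


def summarize(values):
    """Edge-style aggregation: reduce a window of readings to a few statistics."""
    return {
        "count": len(values),
        "min": min(values),
        "max": max(values),
        "mean": sum(values) / len(values),
    }


def flag_anomalies(values, window=5, threshold=3.0, min_scale=0.01):
    """Flag readings far from the median of the preceding `window` readings.

    Distance is measured in units of the median absolute deviation (MAD),
    floored at `min_scale` so a perfectly flat signal does not divide by zero.
    """
    flags = []
    for i, value in enumerate(values):
        history = values[max(0, i - window):i]
        if len(history) < window:
            flags.append(False)  # not enough history to judge yet
            continue
        center = median(history)
        mad = median([abs(h - center) for h in history])
        scale = max(mad, min_scale)
        flags.append(abs(value - center) / scale > threshold)
    return flags


# Example: wet-well level readings in metres with one obvious spike.
levels = [2.0, 2.1, 2.0, 2.2, 2.1, 2.1, 9.5, 2.0]
print(summarize(levels[:5])["max"])  # 2.2
print(flag_anomalies(levels))
```

In this example only the 9.5 m reading is flagged. Whether a flagged reading points to a failing sensor or a genuine event remains a decision for the operator.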

As water utilities leverage sensors and cloud platforms to manage infrastructure, they must be diligent in their pursuit of accuracy and reliability. By combining technological innovation, rigorous standards, and collaborative partnerships with suppliers, utilities can unlock the potential of this collaboration and ensure that the flow of data remains not just a stream of information but a river of actionable insights to drive positive change.

This article is Part 3 of a four-part series on improving pump station management in wastewater systems.